With 81% of candidates now using AI tools to navigate their careers, according to PARWCC, the question is no longer whether students use AI, but how you can ensure they don't use it to their own detriment.

AI has become the default first draft for resumes, cover letters, and interview prep.

Used correctly, it can sharpen structure and surface gaps. Used blindly, it produces generic, error-prone content that recruiters increasingly recognize and reject.

For career advisors, the risk is uncoached adoption.

This guide outlines the most common ways students misuse AI in job prep, how to help them refine AI-generated content into credible, human narratives, and the guardrails career centers need to protect authenticity and outcomes.

What are the biggest mistakes students make when using AI for job prep?

Students often treat AI as a "magic button," resulting in generic, robotic content that lacks the human nuance recruiters crave. According to the NACE 2025 Student Survey Report, while only one-third of seniors use AI, those who do primarily use it for cover letters (64.8%) and resumes (61.6%), often failing to verify "hallucinated" facts.

The "Copy-Paste Trap" is the most dangerous. Students frequently prompt AI to "write a resume for a marketing intern" without providing specific experiences.

This leads to hallucinations, where the AI invents credentials the student doesn't have.

Furthermore, Handshake's 2025 State of the Graduate report notes that applications per job have increased by 30%, meaning a generic AI resume is even more likely to be buried under a mountain of high-quality, personalized applications.

Common Misuse Indicators:

- The "Vague Verb" Syndrome: Using words like "leveraged," "facilitated," or "spearheaded" without any quantifiable data.

- Skill Over-Stacking: Listing every software mentioned in a job description despite only having "watched a tutorial" once.

- The Formatting Nightmare: Students often paste AI text into resume templates, where hidden formatting symbols can break parsing by Applicant Tracking Systems (ATS).
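Two of these indicators are mechanical enough to screen for before a student ever sits down with an advisor. The sketch below is a rough first pass, not a grading tool: the `VAGUE_VERBS` list and both function names are illustrative assumptions, and "no digit anywhere in the bullet" is only a crude proxy for "no quantifiable data."

```python
import re
import unicodedata

# Hypothetical starter list taken from the examples above; extend it as needed.
VAGUE_VERBS = {"leveraged", "facilitated", "spearheaded"}


def is_vague(bullet: str) -> bool:
    """Return True if the bullet uses a vague verb and contains no digit at all."""
    words = set(re.findall(r"[a-z]+", bullet.lower()))
    has_vague_verb = bool(words & VAGUE_VERBS)
    has_number = any(ch.isdigit() for ch in bullet)
    return has_vague_verb and not has_number


def strip_hidden_characters(text: str) -> str:
    """Drop invisible control/format characters (Unicode categories Cc and Cf).

    Newlines and tabs are kept so the resume layout survives.
    """
    return "".join(
        ch
        for ch in text
        if ch in "\n\t" or unicodedata.category(ch) not in ("Cc", "Cf")
    )


if __name__ == "__main__":
    print(is_vague("Spearheaded social media initiatives"))
    print(is_vague("Spearheaded a campaign that grew sign-ups by 22%"))
    print(repr(strip_hidden_characters("Team\u200b lead\u00ad")))
```

A flagged bullet is a prompt for a conversation ("what was the number?"), not proof of misuse.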


How can I teach students to refine AI-generated content for better results?

Advisors should coach students to move from "Basic Prompting" to "Chain-of-Thought" engineering. Instead of asking AI to "write," ask it to "critique" or "structure" based on the STAR method (Situation, Task, Action, Result). According to the Vanderbilt University Prompt Engineering guide, using a "Persona Pattern" significantly improves output quality.

Coach your students to use a three-step refining process:

- Context Loading: Feed the AI the job description and the student's raw, messy notes first.

- The "STAR" Constraint: Explicitly tell the AI: "Rewrite these bullet points using the STAR method, focusing on a measurable 'Result' for each."

- The Critical Lens: Ask the AI: "What is missing from this resume that a recruiter in [Industry X] would look for?"
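The three steps above can be written down as reusable prompt templates so students start from the same structure every time. This is a minimal sketch under stated assumptions: `build_prompts` is a hypothetical helper, the wording of the context-loading prompt is illustrative, and the "do not invent" instruction is an added safeguard rather than part of the original prompts. It only builds strings; it does not call any AI service.

```python
def build_prompts(job_description: str, raw_notes: str, industry: str) -> list[str]:
    """Return the three prompts, in the order a student would send them."""
    # Step 1: Context Loading. Give the model the job description and raw notes first.
    context_loading = (
        "Here is the job description:\n"
        f"{job_description}\n\n"
        "Here are my raw notes about my experience:\n"
        f"{raw_notes}\n\n"
        "Do not write anything yet. Confirm you have read both."
    )

    # Step 2: The STAR Constraint. The last sentence is an assumption added
    # here to discourage invented metrics; it is not from the original prompt.
    star_constraint = (
        "Rewrite these bullet points using the STAR method, focusing on a "
        "measurable 'Result' for each. Do not invent facts or numbers; "
        "ask me for any detail you need."
    )

    # Step 3: The Critical Lens. Ask for gaps rather than more prose.
    critical_lens = (
        "What is missing from this resume that a recruiter in "
        f"{industry} would look for?"
    )

    return [context_loading, star_constraint, critical_lens]


if __name__ == "__main__":
    for prompt in build_prompts("Marketing Intern ...", "ran club Instagram ...", "marketing"):
        print(prompt)
        print("-" * 40)
```

Keeping the prompts as separate steps, rather than one long request, mirrors the coaching goal: the student reviews the output after each step instead of accepting a finished document.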

According to NACE AI Bootcamp experts, AI is most effective at pattern matching, such as identifying missing keywords, rather than at writing the narrative itself.

Encourage students to use AI to find the "skill gaps" between their resume and the job description.
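To show students what "pattern matching" means in practice, it can help to demonstrate the simplest possible version of a gap check. The sketch below is an assumption-heavy illustration, not how any particular AI tool or ATS works: it compares plain word sets, and the `STOPWORDS` list and function names are hypothetical.

```python
import re

# Hypothetical, deliberately short stopword list for the demo.
STOPWORDS = {"and", "the", "with", "for", "our", "you", "are", "will", "that"}


def keywords(text: str) -> set[str]:
    """Lowercase words of three or more letters, minus common filler words."""
    words = re.findall(r"[a-z]+", text.lower())
    return {w for w in words if len(w) >= 3 and w not in STOPWORDS}


def missing_keywords(job_description: str, resume: str) -> list[str]:
    """Words that appear in the job description but not in the resume, sorted."""
    return sorted(keywords(job_description) - keywords(resume))


if __name__ == "__main__":
    jd = "Seeking an intern with Excel, SQL and Tableau experience"
    resume = "Built Excel dashboards and wrote SQL queries"
    print(missing_keywords(jd, resume))
```

The output is a list of candidate gaps for the student to evaluate. A missing word should only be added to the resume if the student has real experience behind it.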

What guardrails should advisors set for resumes and interviews?

The primary guardrail is the "Human-in-the-Loop" requirement. While 76% of career centers now use AI as an assistive tool, according to NACE, advisors must emphasize that unedited AI-generated content can get an application screened out.

Practical Guardrails for Your Office:

- Privacy First: Ensure students never input personally identifiable information (PII) like Social Security numbers or home addresses into public LLMs. According to WestEd’s 2026 AI Guidelines, data once provided to public models cannot be retrieved.

- Resume "Uniqueness" Check: If a student’s resume sounds like every other applicant, it’s a fail. Set a rule: Every bullet point must contain a specific name of a project, software, or metric that an AI couldn't have guessed.

- Interview Authenticity: For interview prep, use AI simulations to practice, but warn students against memorizing AI-generated scripts. As Cal State East Bay’s Career Empowerment Center notes, AI is for scaling practice, but the human connection is irreplaceable.
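For the "Privacy First" guardrail, a simple pre-paste check can catch the most obvious identifiers before text reaches a public LLM. This is a hedged sketch only: the patterns assume US-style Social Security and phone number formats, phone numbers are included as an extra assumption, and `redact` is a hypothetical helper. Home addresses vary too much for a short pattern, so they still need a manual read.

```python
import re

# Assumed US formats: 123-45-6789 for SSNs, (555) 123-4567 or 555-123-4567 for phones.
SSN_PATTERN = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")
PHONE_PATTERN = re.compile(r"\(?\b\d{3}\)?[-. ]\d{3}[-. ]\d{4}\b")


def redact(text: str) -> str:
    """Replace SSN-like and phone-like strings with placeholders."""
    text = SSN_PATTERN.sub("[REDACTED SSN]", text)
    text = PHONE_PATTERN.sub("[REDACTED PHONE]", text)
    return text


if __name__ == "__main__":
    print(redact("SSN 123-45-6789, call (555) 123-4567"))
```

A check like this lowers risk but does not remove it, so the rule for students stays the same: read the text before pasting it anywhere.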

What does a good AI-to-Advisor edit look like?

An advisor-approved edit takes a "flat" AI statement and injects specific, verifiable evidence. AI is great at structure but terrible at "the truth." You must teach students to "interrogate" the AI's output. Below is an example of how to guide a student through an edit.

| Feature | Raw AI Output | Advisor-Refined Version |
|---|---|---|
| Resume Bullet | Collaborated with a team to improve social media engagement and managed accounts. | Increased Instagram engagement by 22% over 3 months by implementing a new video-first content strategy using Adobe Premiere Pro. |
| Cover Letter Hook | I am writing to express my strong interest in the Marketing Intern position at your esteemed company. | When I saw [Company Name]’s recent campaign on sustainable packaging, I recognized the exact type of data-driven storytelling I’ve been practicing at [University Name]. |
| Interview Answer | I am a hard worker who always meets deadlines and enjoys working in teams. | In my Junior year capstone, I managed a team of 5. When a member fell ill, I reallocated tasks using Trello to ensure we met our 24-hour deadline. |

According to PARWCC's 2026 Guide, employers are becoming more selective, seeking "very specific skills that deliver immediate value." The refined versions above show proof, whereas the raw AI versions only make claims.


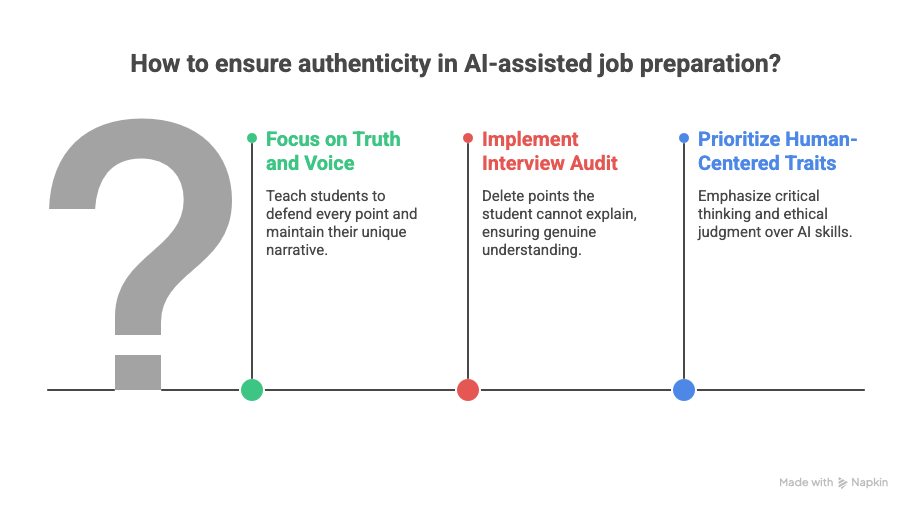

Where should we draw the line on AI-generated authenticity?

Draw the line at "Truth and Voice." Teach students that AI is a co-pilot for structure, not the author of their lived experience. If a student cannot verbally defend every bullet point during an interview, they have moved from efficiency to misrepresentation. Authenticity is a competitive advantage in a market flooded with synthetic content.

According to Jisc’s 2025 Student Perception Report, students themselves fear losing their identity through "homogenization of thought."

Counter this by implementing an "Interview Audit": if a student can't explain a point's how and why, delete it.

While AI is popular, NACE’s Job Outlook 2026 shows that only 13.3% of employers prioritize AI skills. They value human-centered traits that bots cannot replicate, such as critical thinking and ethical judgment.

Ensure students use AI to refine their narrative, not replace it. Authentic stories are the only ones that stick in 2026.


Wrapping Up

AI isn’t going away, and neither is the responsibility to guide students in using it well.

The career centers that will stand out are the ones that actively teach students how to question AI output, refine it, and ground it in real evidence and human judgment.

To do that at scale, advisors need systems that support coaching, not tools that replace it.

Hiration is built with this reality in mind, using AI to surface skill gaps, strengthen resume structure, and expand interview practice, while keeping counselors firmly in control.

In a market flooded with synthetic content, the most valuable outcome career centers can deliver remains unchanged: graduates who can clearly explain what they’ve done, why it matters, and how they add value.