“Good advising” is no longer enough.

In 2026, advisors are judged by measurable impact on retention, momentum, and career outcomes, not just policy knowledge or availability.

At the same time, nearly 40% of public four-year institutions anticipate budget cuts to student services, according to Tyton Partners’ Driving Toward a Degree 2025 report.

In this environment, strategic, tech-fluent advisors stand out.

An Advisor Self-Assessment Tool is a practical way to align your skills with what modern institutions now expect and to stay indispensable in a changing landscape.

Here’s how to structure one that reflects the realities of modern advising.

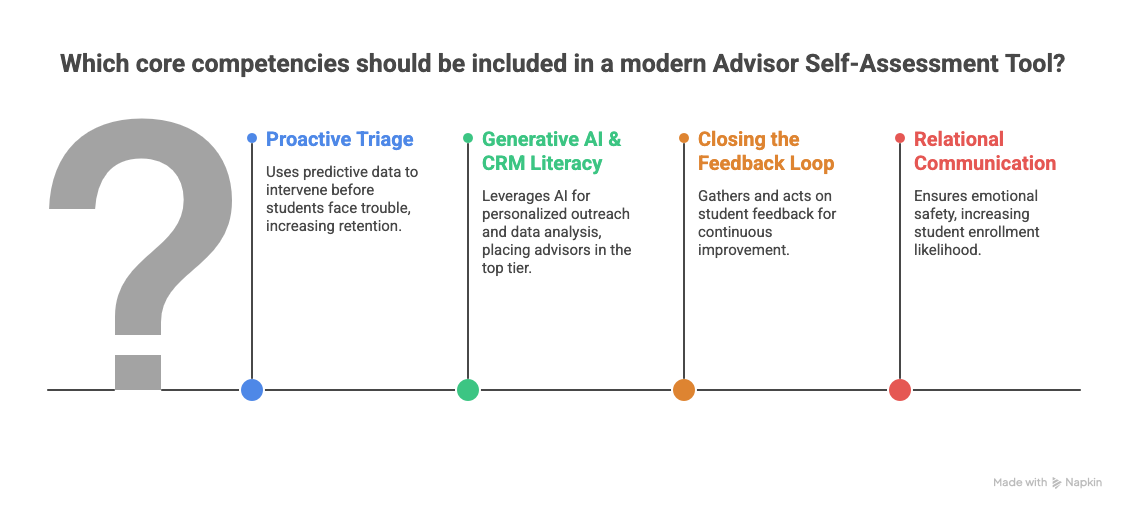

What core competencies belong in a modern Advisor Self-Assessment Tool?

An effective tool must evaluate communication, triage, feedback, and tech familiarity. While standard tools focus on "knowledge of policy," modern frameworks emphasize "Relational" and "Technological" skills. You should assess your ability to move beyond transactional course scheduling and into proactive, data-informed student coaching that directly impacts retention and career readiness.

To stay "indispensable" in 2026, focus your self-assessment on these four specific pillars, which build on the NACADA Core Competencies Model:

- Proactive Triage: This isn't just making a referral. It's the ability to use predictive data to intervene before a student knows they are in trouble. For example, according to Georgia State University's GPS Advising results, using a system of 800+ alerts to "triage" student needs led to a 5-percentage-point increase in freshman retention.

- Generative AI & CRM Literacy: Can you use GenAI to draft personalized outreach or analyze caseload trends? Only 11% of higher-ed administrators and advisors say their student-success data is GenAI-ready, per Tyton Partners, so mastering this skill can put you well ahead of most of your peers.

- Closing the Feedback Loop: Assess how you gather and act on student feedback. High-performing advisors at the University of South Florida use "condition-based" assessments that go beyond simple "student satisfaction" surveys.

- Relational Communication: Evaluate your ability to create emotional safety in advising conversations. Students who feel emotionally safe are 16 percentage points more likely to stay enrolled, according to the 2024 Tyton Partners Listening to Learners report.
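
To make "proactive triage" concrete, here is a minimal Python sketch of rule-based early-alert flagging. It is not Georgia State's GPS system or any vendor's CRM: the `StudentSnapshot` fields, the thresholds in `ALERT_RULES`, and the `triage` function are hypothetical, chosen only to show how stacking several alerts can rank a caseload so the students with the most warning signs are contacted first.

```python
from dataclasses import dataclass


@dataclass
class StudentSnapshot:
    # Hypothetical fields; a real early-alert system would pull these from SIS/LMS data.
    student_id: str
    midterm_grade: float          # 0-100 scale
    registered_next_term: bool
    days_since_last_login: int    # days since last LMS activity


# Hypothetical alert rules as (name, predicate) pairs; thresholds are illustrative only.
ALERT_RULES = [
    ("low_midterm", lambda s: s.midterm_grade < 70),
    ("not_registered", lambda s: not s.registered_next_term),
    ("lms_inactive", lambda s: s.days_since_last_login > 10),
]


def triage(students):
    """Return (student_id, alerts) for flagged students, most alerts first."""
    flagged = []
    for s in students:
        alerts = [name for name, rule in ALERT_RULES if rule(s)]
        if alerts:  # students with no alerts are left off the outreach list
            flagged.append((s.student_id, alerts))
    # sorted() is stable, so students with equal alert counts keep their input order.
    return sorted(flagged, key=lambda pair: len(pair[1]), reverse=True)


caseload = [
    StudentSnapshot("A100", 82.0, True, 2),
    StudentSnapshot("A101", 64.0, False, 14),
    StudentSnapshot("A102", 91.0, False, 1),
]
for student_id, alerts in triage(caseload):
    print(student_id, alerts)
# A101 ['low_midterm', 'not_registered', 'lms_inactive']
# A102 ['not_registered']
```

Ranking by alert count is the simplest possible prioritization; a predictive system would weight signals by how strongly they relate to attrition, but the self-assessment question is the same: do you act on the list before the student comes to you?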

How should I structure a rating scale for authentic self-reflection?

Avoid the "1-to-5" trap. Instead, use a behavioral scale such as "Emerging," "Proficient," and "Mastery." This forces you to identify specific actions you can or cannot yet perform. Pair each rating with a "Reflection Prompt" that asks for evidence of a student outcome you directly influenced through that competency.

Instead of checking "Satisfactory" for communication, rate yourself with a scale and prompts like these, adapted from the UC Berkeley Advising Competencies:

1. The Scale:

   - Emerging: I know the policy but struggle to explain the "why" to students.

   - Proficient: I can navigate the CRM and handle typical triage needs.

   - Mastery: I am a "power user" who trains others on tech-mediated advising and uses the CCRC SSIPP framework (Sustained, Strategic, Integrated, Proactive, Personalized).

2. The Prompts:

   - “When was the last time I initiated contact with a ‘silent’ student before a registration deadline?”

   - “Can I explain the career-readiness ROI of a liberal arts degree to a skeptical parent?” This one matters: only 30% of staff at private four-year institutions strongly agree their institution is worth the cost, per Tyton Partners.
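
If you keep your assessment in a script or spreadsheet rather than on paper, each entry can pair a behavioral level with its reflection prompt and evidence. The Python sketch below is one hypothetical way to model that. The class names, the three-value `Level` enum, the sample entries, and the rule that a rating without evidence counts as a gap are all assumptions for illustration, not part of the UC Berkeley competencies.

```python
from dataclasses import dataclass
from enum import IntEnum


class Level(IntEnum):
    # The three behavioral levels from the scale above; IntEnum makes them comparable.
    EMERGING = 1
    PROFICIENT = 2
    MASTERY = 3


@dataclass
class CompetencyRating:
    competency: str
    level: Level
    prompt: str          # reflection prompt tied to this competency
    evidence: str = ""   # a student outcome you directly influenced (empty = none yet)


def needs_attention(ratings):
    """Competencies still below Proficient, or rated at any level without evidence."""
    return [
        r.competency
        for r in ratings
        if r.level < Level.PROFICIENT or not r.evidence.strip()
    ]


# Hypothetical sample entries for illustration.
ratings = [
    CompetencyRating(
        "Proactive Triage", Level.PROFICIENT,
        "When was the last time I initiated contact with a 'silent' student?",
        "Reached out to unregistered students before the deadline",
    ),
    CompetencyRating(
        "GenAI & CRM Literacy", Level.EMERGING,
        "Have I used the CRM to spot a caseload trend this term?",
    ),
    CompetencyRating(
        "Career Alignment", Level.MASTERY,
        "Can I explain the career-readiness ROI of a liberal arts degree to a skeptical parent?",
    ),
]
print(needs_attention(ratings))
# ['GenAI & CRM Literacy', 'Career Alignment']
```

Flagging the "Mastery" rating that has no evidence is deliberate: it keeps the scale behavioral, so a high rating still has to point to a student outcome.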

How do I turn assessment results into a professional development plan?

Apply the "70:20:10" model of professional development. Use your assessment gaps to allocate 70% of your growth to hands-on experience (e.g., leading a new workshop), 20% to social learning (e.g., peer coaching), and 10% to formal instruction (e.g., NACADA webinars). Align these goals with your institution’s specific retention KPIs.
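
As a quick arithmetic check, the Python sketch below splits a development-time budget by the 70:20:10 ratio. The function name and the idea of budgeting in hours are assumptions for illustration; the ratio itself is the model described above, and 40 hours per term is only a hypothetical input.

```python
# The 70:20:10 split: hands-on experience, social learning, formal instruction.
SPLIT = {"experience": 0.70, "social": 0.20, "formal": 0.10}


def allocate_70_20_10(total_hours: float) -> dict:
    """Split a development-time budget by the 70:20:10 ratio, rounded to 0.1 hour."""
    return {bucket: round(total_hours * share, 1) for bucket, share in SPLIT.items()}


print(allocate_70_20_10(40))
# {'experience': 28.0, 'social': 8.0, 'formal': 4.0}
```

For a 40-hour budget, that means roughly 28 hours leading something new, 8 hours of peer coaching, and 4 hours of webinars or courses.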

Don't just "want to get better." Follow this structure:

- Identify the Gap: (e.g., "I struggle with data-driven triage.")

- Set the 70% Goal: According to NASPA’s Professional Competency Guidelines, the best way to grow is to take responsibility for a specific project. Ask to manage the "Early Alert" dashboard for your department for one semester.

- The Institutional Link: If your college is like most, it is facing a "staffing shortage" (over 70% of large public institutions report one, according to Tyton Partners). Frame your development as a way to automate lower-level tasks so you can handle higher caseloads more effectively.

What do typical advisor strengths and gaps look like in today’s landscape?

Common strengths include high "Informational" knowledge (knowing the catalog), while common gaps include "Technological" and "Career-Alignment" competencies. Many advisors are excellent at answering "What class do I take?" but struggle with "What job can I get?" or "How do I use this CRM to predict student failure?"

- Real-World Example (Strength): Advisors at Arizona State University excel in "e-advising," using adaptive tools to increase pass rates in gateway courses from 84% to 88%.

- Real-World Example (Gap): A massive gap exists in "Safety and Belonging" conversations. While 41% of students think campus safety is a top topic to discuss, only 10% of advisors prioritize it, according to the Listening to Learners 2024 report.

- The Tech Gap: A significant divide exists between the availability of advanced advising technologies and their actual daily application. According to the 2025 EDUCAUSE Top 10 report, this bottleneck is primarily caused by a lack of "digital fluency" and institutional training rather than a lack of interest among staff. Without dedicated professional development to bridge this skills gap, advisors remain tethered to manual processes, missing opportunities to use data for proactive student support.

Why is a quarterly self-review schedule the most effective approach?

The academic year moves too fast for annual reviews. A quarterly schedule (Early Fall, Pre-Spring, Mid-Spring, and Summer) allows you to adjust your competencies in real time. For instance, Fall should focus on "Triage" skills, while Summer, when student traffic is lower, should focus on "Tech Familiarity" and "Curriculum Mastery."

According to the NACADA Academic Advising Handbook, the first and last two weeks of any term are "peak triage." A quarterly schedule helps you prepare:

- Q1 (August - October): Focus on Relational skills and building rapport.

- Q2 (November - January): Focus on Informational skills (registration policies) and Feedback loops.

- Q3 (February - April): Focus on Career Alignment. (53% of students want to talk about career options, but only 60% of advisors feel it’s their primary role, per Tyton Partners).

- Q4 (May - July): Focus on Technological mastery and professional development planning.
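
If you schedule reviews in a calendar or script, the quarterly plan reduces to a month lookup. The Python sketch below encodes the four quarters listed above; `QUARTERS` and `review_focus` are hypothetical names, and the month boundaries assume the August-start academic year shown in the list.

```python
from datetime import date

# Focus areas from the quarterly schedule above, keyed by calendar month (1-12).
QUARTERS = {
    "Q1": ((8, 9, 10), "Relational skills and building rapport"),
    "Q2": ((11, 12, 1), "Informational skills (registration policies) and feedback loops"),
    "Q3": ((2, 3, 4), "Career alignment"),
    "Q4": ((5, 6, 7), "Technological mastery and professional development planning"),
}


def review_focus(month: int) -> tuple:
    """Return (quarter, focus) for a calendar month; Q2 spans the new year."""
    for quarter, (months, focus) in QUARTERS.items():
        if month in months:
            return quarter, focus
    raise ValueError(f"month must be 1-12, got {month}")


quarter, focus = review_focus(date.today().month)
print(f"This quarter ({quarter}): {focus}")
```

Keying by month rather than by term dates keeps the sketch simple; an institution with a different calendar would only need to edit the month tuples.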

Wrapping Up

A self-assessment only creates value if it translates into better student outcomes.

As advising grows more data-driven and career-aligned, the tools supporting that work matter.

Platforms that connect career assessments, resume optimization, interview simulation, and advisor-facing analytics in one ecosystem reduce fragmentation and free up cognitive bandwidth for higher-impact conversations.

Hiration’s full-stack career readiness suite is built with that integration in mind.

From AI-powered student tools to a dedicated Counselor Module for managing cohorts, workflows, and insights, it supports modern advising within a secure, FERPA- and SOC 2-compliant environment.

The goal isn’t more technology. It’s clearer signals, stronger interventions, and advisors who can operate at their highest level.